Automation use for custom Azure reporting

We hear lots of talks that we should automate everything we can and, being honest, we have never been in a better position to leverage this practice. We live in times when almost everything is exposed via well-defined APIs, infrastructure can be defined as code, we have different serverless compute options and much more. But once we look practically for the things that still can be automated the potential is enormous. Why is that?

In my view, there is still lack of common knowledge of the latest automation tooling capabilities, that many more of us, working in IT, could map our manual work and processes to the technical automation implementations. The other missing success factor is our courage to get our hands dirty by writing that automation code.

I am not saying that coding is for everyone, for those higher up my suggestion would be to raise the bar by showing genuine interest to understand the practical automation level in your IT organizations, invest in education and set measurable goals to increase automation usage.

I am going to describe a basic automation example that could ease some of Azure related reporting tasks. The solution I am describing has several commonly used automation techniques, that can be easily customized to meet different scenarios. Hopefully this can give some ideas how similar solutions can be built by many of us to meet our own needs.

Description of task I will automate

On demand report that will show all new specific Azure tag values compared to the date we give.

Solution

There are different ways to solution this, I have chossen to write my automation code in PowerShell and will build solution using following solution compoenents: Azure Functions, Azure Automation, Blob storage and Azure Graph API. I will expose a RESTful API, for triggering this on-demand report via a web URL. When triggering the report, the date to compare against will have to be passed in the URL. It is obvious that it is possible to go a step further and build a nice-looking web frontend to mask this RESTful API with a more user-friendly interface, but I need to work on a reasonable scope, to make this post digestible ?

For sure a lot of improvements can be done to the code itself, including more extensive error handling, enabling logging and so on, but I will stick to my goal to deliver a working solution with the purpose of educating wider audience in the core automation techniques and capabilities.

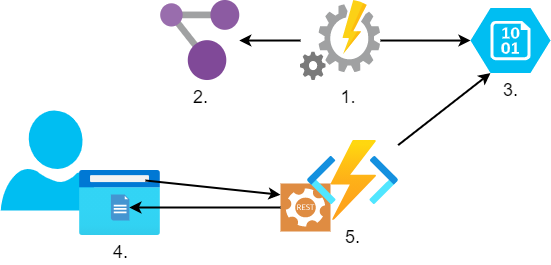

Logical solution diagram:

- We will schedule a daily Azure Automation runbook, to generate all unique specific tag values. In my example the specific tag key is “costcenter”.

- File generation will be based on Azure Graph query results.

- We will store the output file to Azure Blob storage.

- User will be presented with web URL, to invoke a REST call with GET method for triggering the function that will output the report to the web browser’s screen.

- Azure function, when triggered, will generate the report content by comparing the two unique, in my case “costcenter”, tag lists. It will compare the latest list available with the list from the date specified in the web call.

Solution implementation

It is quite difficult to describe every solution detail, but I will do my best to pass the most important information and will pinpoint the trickier parts to be considered to enable smooth replication of the solution, if wanted.

We should start by deploying Azure resources needed for the solution. Unfortunately, I have not written ARM or Terraform code for the Azure infrastructure implementation (might do that, if I will find time in the future). So, you will have to create:

- Storage Account, with a container. This container will be used to store our daily unique costcenter tag lists.

- Azure Automation Account. It will be used to run our daily PowerShell runbook, which will run an Azure Graph API query and output the results to a file in the storage container we created earlier, setting the current date (YY-MM-DD) as the file name.

Good to know that you might need to import few additional AZ modules to the automation account that our runbook could run all the commands.

- Azure Function App which will host our PowerShell function. Our function will be listening for a web API call, to run the comparison and output results as web API call response.

Tips: Use the PowerShell Core runtime stack, serverless plan, create system assigned identity for the function and give it access to the storage container.

Once we have our Azure resources deployed, we can start developing our automation code. Probably good next step would be to write our Azure Graph Query to list the unique tag values. Azure Graph API is very fast and powerful tool when you need to generate reports from your Azure environment. Querying basic things is quite straightforward and could save lots of time! If you have not tried it, I highly recommend playing with it, I can guarantee that you will be nicely surprised ?

Service is available on https://portal.azure.com/#blade/HubsExtension/ArgQueryBlade

Query we need looks following:

Resources

| where isnotempty(tags.costcenter)

| extend costcenter = tostring(tags.costcenter)

| distinct costcenter

| project costcenter

The trickier part with the query above is that we need to include the two middle rows to remove the duplicate entries, whenever query list multiple objects with the same tag key value.

Once we have our Azure Graph API query, we can work on our code for the runbook which will run this query and will write output a new file to be stored in blob storage, setting the current date as the file name.

##################################################################

# Variables

##################################################################

$connectionName = "AzureRunAsConnection"

$resourceGroupName = 'rgCostcenterReporting'

$storageAccountName = 'sacostcenterreporting'

$blobContainer = 'costcenterreports'

$blobName = "$(get-date -f yyyy.mm.dd).txt"

$outputTempPath = $env:temp

##################################################################

# Runbook

##################################################################

$ErrorActionPreference = 'stop'

#Connecting to Azure

try

{

# Get the connection "AzureRunAsConnection"

$servicePrincipalConnection=Get-AutomationConnection `

-Name $connectionName

"Logging in to Azure..."

Connect-AzAccount `

-ServicePrincipal `

-TenantId $servicePrincipalConnection.TenantId `

-ApplicationId $servicePrincipalConnection.ApplicationId `

-CertificateThumbprint `

$servicePrincipalConnection.CertificateThumbprint

"Logged in to Azure successfully."

}

catch {

Write-Error "Loggin to Azure failed"

}

<#Running Azure Resource Graph query to list out all unique "costcenter" tag key

values, with output to local file #>

$GraphSearchQuery = "Resources

| where isnotempty(tags.costcenter)

| extend costcenter = tostring(tags.costcenter)

| distinct costcenter

| project costcenter"

try {

$resources = Search-AzGraph -Query $GraphSearchQuery

Write-Output $resources | out-file `

-FilePath "$($outputTempPath)\$blobName"

}

catch {

Write-Error "Failure running Search-AzGraph"

}

#Upload temp output to blob storage

try {

$storageAccount = Get-AzStorageAccount -ResourceGroupName `

$resourceGroupName -Name $storageAccountName

$storageContext = $storageAccount.Context

"Storage context loaded successfully"

}

catch {

Write-Error "Failure getting storage context"

}

try {

Set-AzStorageBlobContent -File "$($env:temp)\$blobName" `

-Container $blobContainer `

-Blob "$blobName" `

-Context $storageContext

"File uploaded to blob successfully"

}

catch {

Write-Error "Failed to upload file to blob"

}

The final and most complex step of this solution is to develop an Azure Function that would select the two lists for comparison (the latest one and the specific date list as per inputs from the web trigger), compare the lists and form a nice web friendly output.

using namespace System.Net

# Input bindings are passed in via param block.

param($Request, $TriggerMetadata)

# Write to the Azure Functions log stream.

Write-Host "PowerShell HTTP trigger function processed a request."

# Interact with query parameters or the body of the request.

$OldListDate = $Request.Query.OldListDate

if (-not $OldListDate) {

$OldListDate = $Request.Body.OldListDate

}

$htmlBody = "This HTTP triggered function executed successfully.

Pass a date in the query to get the report of new CCs added

to Azure since that date. Append the date paramter to the

end of the URL e.g: &OldListDate=2021.01.15"

if ($OldListDate) {

#The function logic body when date parameter passed

$ErrorActionPreference = 'stop'

$resourceGroupName = 'rgCostcenterReporting'

$storageAccountName = 'sacostcenterreporting'

$blobContainer = 'costcenterreports'

$temppath = $env:temp

try {

$storageAccount = Get-AzStorageAccount `

-ResourceGroupName $resourceGroupName -Name $storageAccountName

$storageContext = $storageAccount.Context

Write-Host "Storage context loaded successfully"

}

catch {

Write-Error "Failure getting storage context"

}

#Identify and load latest list

try {

$LatestList = Get-AzStorageBlob -Container $blobContainer `

-Context $storageContext | Sort-Object LastModified `

| Select-Object -last 1

$BaseLatestFileName = $LatestList.name.substring(0,$LatestList.name.Length-4)

Write-Host "Latest list file identified successfully"

}

catch {

Write-Error "Failure identifying latest list file"

}

try {

[void](Get-AzStorageBlobContent -Container $blobContainer `

-Blob $($LatestList.Name) -Context $storageContext `

-Destination "$($temppath)\" -Force)

$LatestListContent = Get-Content "$($tempPath)\$($LatestList.name)"

Write-Host "Latest list loaded successfully"

}

catch {

Write-Error "Failure loading the file content"

}

#Get list of the date to compare to

try {

$OldList = Get-AzStorageBlob -Container $blobContainer `

-blob "$($OldListDate).txt" -context $storageContext

[void](Get-AzStorageBlobContent -Container $blobContainer -Blob $OldList.Name `

-Context $storageContext -Destination $tempPath -Force)

$OldListContent = Get-Content "$($tempPath)\$($OldList.name)"

Write-Host "Old list loaded successfully"

}

catch {

Write-Error "File not found"

}

#Run the comparison

try {

$comparison = Compare-Object $OldListContent $LatestListContent `

| where-object -property SideIndicator -eq =>

$output = $comparison | Select-Object @{N='New Tags'; E={$_.InputObject}} `

| ConvertTo-Html

Write-Host "Comparison was done successfully"

}

catch {

Write-Error "Comparison failed"

}

#Form output content

try {

$htmlBody = @"

<body>

<p>Results of Cost Center list comparison from $OldListDate with

latest list generated on $BaseLatestFileName</p>

<p>$output</p>

</body>

"@

Write-Host "Output content was formed successfully"

}

catch {

Write-Error "Forming output content failed"

}

#Function body end

}

#Define function output

Push-OutputBinding -Name Response -Value ([HttpResponseContext]@{

StatusCode = [HttpStatusCode]::OK

ContentType = "text/html"

Body = $htmlBody

})

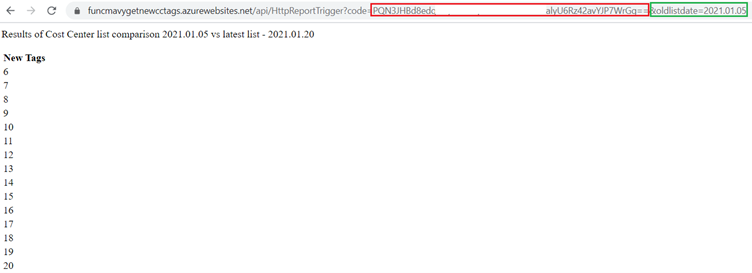

Once I publish this function code in the function, I will get the function URL with its key. This URL will be used to trigger the report.

User experience

Now when we went through the implementation code let us look at the end user experience.

Once user will enter the function URL also passing the “oldlistdate” parameter he will get back the report (the red marked is the function key and the green marked is the oldlistdate parameter):

All this might not seem a big thing, but using techniques described we can cover variety of needs. We could adjust the function to generate reports by email on regular intervals. Reports could contain point in time results of the Azure Graph queries we are interested in, what would make the function code even more simple. Report data could populate a simple Database which could be connected to PowerBI visual dashboards. Opportunities are endless and the good thing is the more you will practice the easier it will become to build your own logic. Good luck with your automation initiatives!